Looking at TDD: An Academic Survey

by Ted M. Young

In which I discuss TDD in the context of opinion and academic papers.

I've been reading lots and lots (no really, hundreds -- you should see my Zotero) of academic papers over the past year or so. Most of these have been in the area of Learning Science (so that I could be a better teacher in my Java training classes). But I've always been a researcher, ever since I was a kid reading about the best way to calculate moving averages for stock charting (yeah, I had an odd childhood). I hit the jackpot in my university days where I had access to that secret special library where they held the stacks of the computer science related journals (yes, stacks of printed journals, in the days before the web) that weren't available in the regular library.

Nowadays it's so easy to find and read research papers, rather than relying on handed-down wisdom, or opinion, or "best practices". Granted, academic papers can be hard to read and evaluate, and not everything is easily researched or experimented on, for reasons that I might get into at some other time, but the attempt must be made, otherwise we end up with people writing blog posts or articles that are based almost purely on someone's judgment and opinion.

The rest of this post is based on a tweetstorm of mine, but by popular request, I'll be writing more articles that dive into specific TDD-related papers. If you have any specific requests, go ahead and ask me on Twitter at @jitterted.

And So It Begins

The tweetstorm started because I read a post on TDD that was clearly biased against TDD, and I tweeted:

Some days I'm really frustrated by people holding opinions in the face of experiments and data showing the opposite. It's one thing to say that the experiment isn't valid (and then please say why you think so), it's another to misread it entirely.

— Ted M. Young (dev guacamole) (@jitterted) July 5, 2018

The post I was referencing is titled "Lean Testing or Why Unit Tests Are Worse Than You Think" (by Eugen Kiss). Just from the title, I knew it was trouble (the "Lean Testing" doesn't help), but I try to approach things with an open mind, even if I disagree, because I've often been wrong before.

As I read the post, I ran into a reference to some academic papers and thought that the author was going to cite data from those papers to support their point. Instead, I ended up not just dismayed, but a bit angry. First, the Microsoft Research article (not a paper) was mentioned in the context of code coverage. I generally agree with the idea that 100% code coverage is not worth the cost. However, Kiss's "Additional Notes" section dives into TDD:

There is research that didn’t find Test Driven Development (TDD) improving coupling and cohesion metrics

Which is somewhat misleading (and a bit confusing). The Microsoft article quotes one of the researchers, Nagappan, as saying (emphasis added):

What the research team found was that the TDD teams produced code that was 60 to 90 percent better in terms of defect density than non-TDD teams.

So by cherry-picking the coupling and cohesion metrics comment (which isn't quite right either, but I'll get to that), Kiss makes it seem as if TDD isn't worth it. Nagappan et al. clearly found otherwise, something that Kiss never mentioned.

The other paper referenced by Kiss is not a traditional paper, but an article in the IEEE Software journal: "Does Test-Driven Development Really Improve Software Design Quality?" [Janzen & Saiedian, 2008]. Did Kiss read the paper? Because the conclusion states:

Our results indicate that test-first programmers are more likely to write software in more and smaller units that are less complex and more highly tested.

And isn't that what we want? They did say that some benefits in terms of higher cohesion and lower coupling were difficult to confirm. But with respect to coupling, the authors state that

some coupling can be good, particularly when it’s configurable or uses abstract connections such as interfaces or abstract classes. Such code can be highly flexible and thus more maintainable and reusable.

and then go on to say

Our evaluation [doesn't] give a conclusive answer to whether the test-first approach produces more abstract designs. However in most of the studies, the test-first approach resulted in more abstract projects in terms of [certain abstraction metrics].

When it comes to cohesion, the authors state

Cohesion is difficult to measure.

At least via calculated metrics. The problem here is that the metric (LCOM5 in this case) counts accessors (getter/setter), which I'm strongly against in Java anyway, so without looking at the code, it's hard to conclude anything about cohesion in TDD vs. Test-Last. However:

The ITF-TL study had the most striking difference with nearly 40 percent of the methods in the test-last project being simple one-line accessors. In contrast, only 11 percent of the test-first methods were simple accessors.

So if nothing else, the Test-First project had many fewer one-line accessors, which in my mind is a good thing!
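To see why accessors muddy a cohesion metric, here's a minimal sketch in Java. It assumes the commonly cited Henderson-Sellers definition of LCOM5 (the paper's tooling may compute it differently), and the class name `Lcom5Sketch`, the method `lcom5`, and the two example "classes" are hypothetical, made up purely for illustration.

```java
import java.util.Map;
import java.util.Set;

public class Lcom5Sketch {

    /**
     * LCOM5 as commonly defined (Henderson-Sellers), an assumption of this sketch:
     *   (m - avg) / (m - 1)
     * where m is the number of methods and avg is the average number of
     * methods that touch each field. 0 means every method uses every field;
     * 1 means each field is touched by only one method.
     */
    static double lcom5(Set<String> fields, Map<String, Set<String>> fieldsUsedByMethod) {
        int m = fieldsUsedByMethod.size();
        if (m < 2 || fields.isEmpty()) {
            return 0.0; // the formula is undefined here; returning 0 is this sketch's choice
        }
        long accesses = 0;
        for (String field : fields) {
            // Count how many methods read or write this field.
            accesses += fieldsUsedByMethod.values().stream()
                    .filter(used -> used.contains(field))
                    .count();
        }
        double avg = (double) accesses / fields.size();
        return (m - avg) / (m - 1);
    }

    public static void main(String[] args) {
        Set<String> fields = Set.of("x", "y");

        // Hypothetical class that is nothing but one-line accessors.
        Map<String, Set<String>> accessorHeavy = Map.of(
                "getX", Set.of("x"),
                "setX", Set.of("x"),
                "getY", Set.of("y"),
                "setY", Set.of("y"));

        // Hypothetical class with the same fields, but only behavior.
        Map<String, Set<String>> behaviorOnly = Map.of(
                "moveBy", Set.of("x", "y"),
                "distanceFromOrigin", Set.of("x", "y"));

        System.out.println("accessor-heavy: " + lcom5(fields, accessorHeavy));
        System.out.println("behavior-only:  " + lcom5(fields, behaviorOnly));
    }
}
```

Under this definition, the accessor-only class scores about 0.67 (less cohesive) and the behavior-only class scores 0, even though both have the same two fields. Each getter and setter touches only one field, so a project full of accessors gets pulled toward a worse score regardless of how the rest of the code is designed.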

Kiss goes on to reference several other blog posts that attack TDD and/or unit testing, but those posts have already been discussed elsewhere, so I'll not go over those again.

A TDD Survey Paper

At this point, with my researcher hat firmly in place (I really do need to get such a hat), my tweets dived into a survey paper: "Considering rigor and relevance when evaluating test driven development: A systematic review" [Munir, et al, 2014]. In an academic survey paper, the researchers search for all papers on a topic (so much easier these days!), narrow them down using methods that are explicitly defined in the paper, and then use the final set to answer their research questions. Survey papers are great for those wanting to survey the field to see what's what, hence the name.

Let's look at a table sorting some of the papers they surveyed:

Ignoring productivity (which I partially attribute to inexperience or poor training, and otherwise to how it's measured -- a whole other topic, for sure), everything else is in favor of TDD, specifically:

With respect to external quality we can see that studies are only positive for TDD, with 7 studies supporting this finding.

Two studies indicate that code complexity is reduced using TDD.

That table is for papers found that the survey classified as "high rigor" (where "rigor is concerned with adhering to good practices when applying a research method and reporting on them") and "high relevance" (where "relevance considers whether the results apply to an industrial context", i.e., is more like a real-world setting).

The next tweet (darned typo)...

Even the "low relevance" (but stil "high rigor") studies indicate that you are pretty likely to do better, and possibly no difference, so why not do #TDD? pic.twitter.com/ol3x1VOQnC

— Ted M. Young (dev guacamole) (@jitterted) July 5, 2018

Refers to this table:

So this means even in more artificial settings, the results are either in favor of TDD, or there's no difference, with a few papers having internal conflict. More precisely:

Results for external quality are conflicting, with 3 studies supporting an improvement in external quality, 6 studies showing no difference, and 1 study indicating negative results. Internal code quality only has 1 study with positive results, 10 studies indicate no difference.

Digging Into An Industrial Empirical Study

From there, my tweets dive into a paper from 2015: "Towards an operationalization of test-driven development skills: An industrial empirical study" [Fucci, et al] (btw, "Towards" is a popular academic title starter). This paper mentions that most empirical studies "tend to neglect whether the subjects possess the necessary skills to apply TDD." So with more/better training in this complex skill, folks could do even better.

This study is interesting, in that it's qualitative/ethnographic and highlights difficulties in doing TDD, e.g., it's not test-first that's hard, it's "switching from a plan-intensive mindset to a lightweight and flexible one", which is a great point.

Just doing the tests first, in other words, is the easy part, but if you've already fully mapped out and planned the implementation in your head, you're not really doing TDD, you're just doing Test-First. And if you've ever heard me talk about TDD, you know that TDD != Test-First. The goal of TDD is not just ensuring test coverage, but providing a step-by-step way to help you design your code.

The study also shows that when learning TDD, you need to also be learning Refactoring, otherwise you're not getting the real benefit of your new-found knowledge about the code, which comes from applying that knowledge by refactoring. This is a common oversight when I see discussions of TDD, because to do TDD well, you have to know how to refactor, which requires that you have a sense of good design.

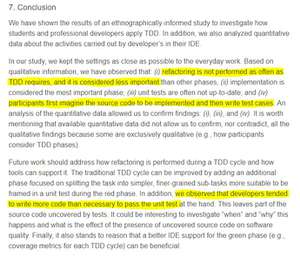

Here's the conclusion from the paper, with highlights that would not surprise anyone who knows or teaches TDD:

That last highlight is why TDD teachers harp on taking such small steps: it's so damned easy to write more code than is needed, thinking "I know I'll need to check for this condition later, so let me just write that code now". The problem is not only that this "leaves part of the source code uncovered by tests" (from the conclusion above), because that'd be easily remedied by just writing some more tests. It's that it may be the wrong design: the code might be in the wrong place, it might not be the right code, etc., but you won't know because you came at it from a code-first point of view.
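As a rough illustration of what a small step looks like, here's a sketch in plain Java with no test framework. The `Cart` class, its methods, and the `check` helper are hypothetical names invented for this example; they don't come from the paper.

```java
import java.util.ArrayList;
import java.util.List;

public class SmallStepsSketch {

    // Production code: only what the two tests in main() have asked for so far.
    static class Cart {
        private final List<Integer> pricesInCents = new ArrayList<>();

        void add(int priceInCents) {
            // Tempting speculative code, deliberately NOT written yet:
            //   if (priceInCents < 0) { ... }
            // No test demands it, so we don't yet know whether that check
            // belongs here, somewhere else, or nowhere at all.
            pricesInCents.add(priceInCents);
        }

        int totalInCents() {
            int total = 0;
            for (int price : pricesInCents) {
                total += price;
            }
            return total;
        }
    }

    // Tiny stand-in for a test framework's assertion.
    static void check(boolean condition, String testName) {
        if (!condition) {
            throw new AssertionError("FAILED: " + testName);
        }
    }

    public static void main(String[] args) {
        // Step 1: written first, it failed until Cart existed and returned 0.
        Cart empty = new Cart();
        check(empty.totalInCents() == 0, "new cart has a total of zero");

        // Step 2: written next, it forced add() and the summing in totalInCents().
        Cart cart = new Cart();
        cart.add(250);
        cart.add(100);
        check(cart.totalInCents() == 350, "total is the sum of added prices");

        System.out.println("all checks passed");
    }
}
```

If negative prices ever matter, the next step would be a failing test that says what should happen, and that test would drive where the check lives. Writing the `if` up front skips exactly that design feedback.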

Contact me on Twitter at @jitterted if you want to discuss this, make suggestions for papers to look at, or (shameless plug) talk to me about the Java training that I do.